XenServer: Under the hood

One thing you notice online when working in virtualisation is the lack of resources explaining the engines, drive trains and gearboxes that go into making system virtualisation possible. This goes for a lot of things: there will always be more how-tos on technical web server topics for Linux than for Windows.

Today I am going to explain a bit about the relationship between processes and objects that come together to make your virtual machine work.

So to start off, what is a virtual machine in essence?

The answer is simple:

A virtual machine is just a container (a piece of software) that an alternative OS sits inside, and that appears to that OS to have all the same interfaces as a real machine: motherboard, BIOS, HDD and so on. [Note: from here on we use the word “container” for the “virtual world” the guest OS lives in.]

The easiest way I think about OS virtualization is thinking back to grade six when I was fiddling with VisualBoyAdvance (http://vba.ngemu.com/). It’s just a lot more advanced, but the concept is the same…

So, the short version is: it’s a piece of software that emulates hardware…

Before I delve into the details of these processes and objects, I want to quickly touch on the two main types of virtualization:

1) HV — Hardware Virtualization

2) PV — Paravirtualization

(from here on, we will just refer to these as PV and HV)

So what exactly are these?

HV:

In full virtualization, the virtual machine simulates enough hardware to allow an unmodified “guest” OS (one designed for the same instruction set) to be run in isolation.

This approach was pioneered in 1966 with the IBM CP-40 and CP-67 predecessors of the VM family.

Examples outside the mainframe field include Parallels Workstation, Parallels Desktop for Mac, VirtualBox, Virtual Iron, Oracle VM, Virtual PC, Virtual Server, Hyper-V, VMware Workstation, VMware Server (formerly GSX Server), QEMU, Adeos, Mac-on-Linux, Win4BSD, Win4Lin Pro, and Egenera vBlade technology.

— source: http://en.wikipedia.org/wiki/Hardware_virtualization

PV:

A hypervisor provides the virtualization abstraction of the underlying computer system. In full virtualization, a guest operating system runs unmodified on a hypervisor.

However, improved performance and efficiency is achieved by having the guest operating system communicate with the hypervisor.

By allowing the guest operating system to indicate its intent to the hypervisor, each can cooperate to obtain better performance when running in a virtual machine. This type of communication is referred to as paravirtualization.
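To make the HV / PV split a little more concrete, here is a minimal Python sketch that classifies a guest from a dictionary shaped like a VM record. It rests on one assumption that is not from the text above: that an HV guest carries a non-empty `HVM_boot_policy` field (typically "BIOS order") while a PV guest leaves it empty. The two sample records and their names are made up for illustration.

```python
def virtualization_mode(vm_record):
    """Return 'HV' or 'PV' for a dict shaped like a VM record.

    Assumption: HV guests have a non-empty HVM_boot_policy,
    PV guests leave it as an empty string.
    """
    if vm_record.get("HVM_boot_policy", ""):
        return "HV"
    return "PV"


# Hypothetical records, for illustration only.
windows_guest = {
    "name_label": "win2008",
    "HVM_boot_policy": "BIOS order",
    "PV_bootloader": "",
}
linux_guest = {
    "name_label": "debian-lenny",
    "HVM_boot_policy": "",
    "PV_bootloader": "pygrub",
}

for vm in (windows_guest, linux_guest):
    print(vm["name_label"], "->", virtualization_mode(vm))
```

Treat this as a rule of thumb rather than a definitive test; check the fields on your own VMs before relying on it.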

This is just to give you a quick understanding of the basic concepts. Now we can look under the hood!

The commands in this demonstration and explanation are run on XenServer 5.5, so it is safe to say they are tested and working on 5.5 only. If it’s different on other versions, let me know in the comments box below!

When we look under the hood of your container we will usually find the following elements:

- VM container

- Virtual Network Interfaces

- Virtual Network

- Physical Network Interface

- Virtual Block Devices (the guest’s HDDs)

- Virtual Disk Image

- Storage Repository

- Physical Block Device

- Host
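Each element in the list above can be listed from the host console with the `xe` CLI. The Python sketch below only builds the command lines, it does not run them. The class names (`vm`, `vif`, `network` and so on) and the `params=` argument are what I would expect from the `xe` CLI, but treat them as assumptions and check them against `xe help` on your own version.

```python
# Element from the list above -> xe CLI class name (the part before "-list").
XE_CLASSES = {
    "VM container": "vm",
    "Virtual Network Interface": "vif",
    "Virtual Network": "network",
    "Physical Network Interface": "pif",
    "Virtual Block Device": "vbd",
    "Virtual Disk Image": "vdi",
    "Storage Repository": "sr",
    "Physical Block Device": "pbd",
    "Host": "host",
}


def xe_list_command(xe_class, params=("uuid",)):
    """Build the argv for `xe <class>-list params=...` without running it."""
    return ["xe", xe_class + "-list", "params=" + ",".join(params)]


for label, xe_class in XE_CLASSES.items():
    print(label.ljust(28), " ".join(xe_list_command(xe_class)))
```

Copy whichever printed command you need onto the XenServer console to see the real objects behind each name.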

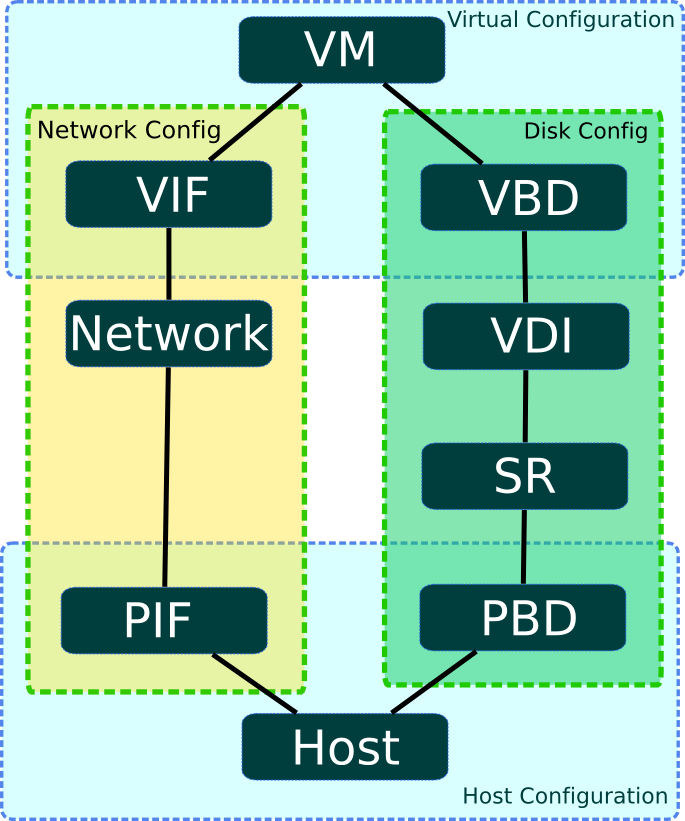

The following picture shows how this comes together:

So by looking at the image above, we can see the relationships, this is how each relationship stands:

- The VM has a ONE TO MANY relationship with both VBDs and VIFs (a VIF or VBD can only be attached to one VM, but many VIFs and VBDs can be attached to a VM)

- The VBD has a ONE TO ONE relationship with the VDI (for a normal guest disk; a read-only image such as an ISO can be attached to several VMs, each through its own VBD)

- The VDI has a MANY TO ONE relationship with the SR (many VDIs can reside on a single SR, but a VDI may never be on more than one SR)

- The SR has a ONE TO ONE relationship with the PBD (on a standalone host; in a pool, a shared SR has one PBD for each host it is plugged into)

- The PBD has a MANY TO ONE relationship with the HOST (many PBDs can reside on a single host, but a PBD may never reside on more than one host)

- The VIF has a MANY TO ONE relationship with the NETWORK (many VIFs can be attached to a single network, but a VIF may only be attached to a single network at any time)

- The NETWORK has a MANY TO MANY relationship with the PIF (typically a network will only be connected to one PIF at a time, but it is possible to bond PIFs)

- The PIF has a MANY TO ONE relationship with the HOST (many PIFs can reside on the HOST, but a PIF may only be on a single host)

So this should provide you with a relational diagram of what the relationship constraints are.
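If you prefer code to diagrams, the constraints above can be sketched as a handful of Python dataclasses. This is only an illustration of the relationships as described for a single standalone host, not how XenServer stores them: every "many" side holds exactly one reference to its "one" side, and the class and field names are my own.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Host:
    name: str


@dataclass
class PBD:
    host: Host  # many PBDs, one host


@dataclass
class SR:
    name: str
    pbd: PBD  # one SR, one PBD (standalone host)


@dataclass
class VDI:
    name: str
    sr: SR  # many VDIs, one SR


@dataclass
class Network:
    name: str


@dataclass
class PIF:
    device: str
    host: Host  # many PIFs, one host
    networks: List[Network] = field(default_factory=list)


@dataclass
class VM:
    name: str


@dataclass
class VBD:
    vm: VM  # many VBDs, one VM
    vdi: VDI  # one VBD, one VDI


@dataclass
class VIF:
    vm: VM  # many VIFs, one VM
    network: Network  # many VIFs, one network


def attached_to(vm, devices):
    """Return the VBDs or VIFs in `devices` that belong to `vm`."""
    return [d for d in devices if d.vm is vm]


# Hypothetical objects, for illustration only.
host = Host("xenserver-01")
sr = SR("Local storage", PBD(host))
vm = VM("web01")
vbds = [VBD(vm, VDI("root", sr)), VBD(vm, VDI("data", sr))]
net = Network("Network 0")
pif = PIF("eth0", host, [net])
vifs = [VIF(vm, net)]

print(len(attached_to(vm, vbds)), "VBDs and",
      len(attached_to(vm, vifs)), "VIF on", vm.name)
```

Because a VBD or VIF has a single `vm` field, it simply cannot belong to two VMs at once, which is the ONE TO MANY rule from the first bullet.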

So what is each of these processes anyway?

- The VM (Virtual Machine) process is the container in which your guest OS is abstracted partially or completely from the HOST system. Whether it is partial or complete depends on whether you choose PV or HV mode.

- The VIF (Virtual Network Interface / Virtual Interface) process is the container’s method of presenting to the guest OS what it perceives as physical network interface cards at the PCI level.

- The Network process resides in the host environment and connects multiple VIFs together to form a logical bus (bridge) on the backend inside the resident HOST. It is also useful for pool operations where VLANs may need to be tagged or where resident hosts need to communicate with each other.

- The PIF (Physical Network Interface / Physical Interface) process controls the physical network interface cards on a resident HOST. These PIFs may be connected to multiple networks, which then bridge onto multiple VIFs.

- The VBD (Virtual Block Device) is the communication layer between the VDI and the VM. It is used to present the VDI as a physical disk to the guest OS, and it also abstracts the VDI away from the guest VM.

- The VDI (Virtual Disk Image) is the process that accesses the flat file contained on the SR of your HDD. This is where your data is written and controlled.

- The SR (Storage Repository) is the process that links VDIs to their resident PBD. It provides control and communication between physical storage and the VDIs.

- The PBD (Physical Block Device) is the process that communicates with the physical storage (whether that be HDDs, iSCSI connections to SAN infrastructure, or even InfiniBand). It communicates using the resident host and provides a communication layer to the SR processes.

- The HOST is the physical computer that your VM resides on. It is responsible for controlling all the other processes residing on it.
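Putting the descriptions above together, a disk write or a network packet from the guest passes through a fixed chain of layers before it reaches the host. Here is a rough Python sketch of that chain. The record layout and the keys (`vbd0`, `sr0` and so on) are made-up labels for illustration, not real XenServer UUIDs or references.

```python
# Hypothetical records: each one names the next layer down towards the host.
OBJECTS = {
    "vm0": {"kind": "VM", "vbds": ["vbd0"], "vifs": ["vif0"]},
    "vbd0": {"kind": "VBD", "next": "vdi0"},
    "vdi0": {"kind": "VDI", "next": "sr0"},
    "sr0": {"kind": "SR", "next": "pbd0"},
    "pbd0": {"kind": "PBD", "next": "host0"},
    "vif0": {"kind": "VIF", "next": "net0"},
    "net0": {"kind": "Network", "next": "pif0"},
    "pif0": {"kind": "PIF", "next": "host0"},
    "host0": {"kind": "HOST"},
}


def trace(start):
    """Follow 'next' links from `start` and return the kinds visited."""
    path = []
    key = start
    while key is not None:
        obj = OBJECTS[key]
        path.append(obj["kind"])
        key = obj.get("next")
    return path


vm = OBJECTS["vm0"]
for vbd in vm["vbds"]:
    print("disk:   ", " -> ".join(["VM"] + trace(vbd)))
for vif in vm["vifs"]:
    print("network:", " -> ".join(["VM"] + trace(vif)))
```

Both chains end at the HOST, which matches the last bullet: the physical machine is where everything else ultimately lives.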

So this is just a quick rundown of what’s under the hood and what keeps your VPS running nice and smoothly.

Do note that these are just the primary (core) processes involved in keeping your VPS running; there are about 30 more equally important processes that we did not list here for simplicity.

Thanks,

Karl.
